“The NSA is basically applied data science.”

Jason Cust

The European Court of Justice declared the EU directive on data retention void on the same day that #heartbleed caused the greatest panic about password security the Net had seen so far.

This tells one story:

Get out into the open! Stop hiding! There are no remote places. No privileges will keep your data from the public. No ingenious open source hack will protect your informational self-determination. And spooks, listen: your voyeurism is not accepted as OK, not even by the law.

We’re living in a world with more transparency, we need to learn to do intelligence with more transparency too.

Bruce Schneier, #unowned

I fought against Europe’s data retention directive, too, and of course I felt victorious when the ECJ declared it void. But don’t we know how futile all efforts to keep data protected are? No cipher can be unbreakable, except the one-time pad (and that is of merely academic interest). No program code can be proven safe, either – these are mathematical facts. Hasn’t #heartbleed taught you how wrong all promises of security are? Data protection lulls us into feeling safe from data breaches, when we should rather take care to make things robust, no matter whether the data becomes public intentionally or not.

The far more important battle was won the same week, when the European Parliament decided to protect net neutrality by law. This is the real data protection for me: protecting the means of data production from being monopolized by private interests.

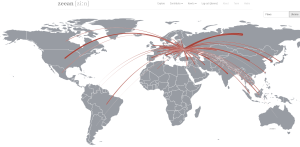

The intelligence agencies’ damaging the Net by undermining its users’ trust in the integrity of its technology is a serious thing in itself. However, burying this scandal under the clutter of civil rights or aspects of constitutional law will not do it justice. What is much more serious, and much more interesting, is that we are living in societies in transition from the modern nation state to the after-modern … whatever, liquid community; into McLuhan’s Global Village. We will have to go through the changes of the world, and it might not be nice all the time until we come out the other end.

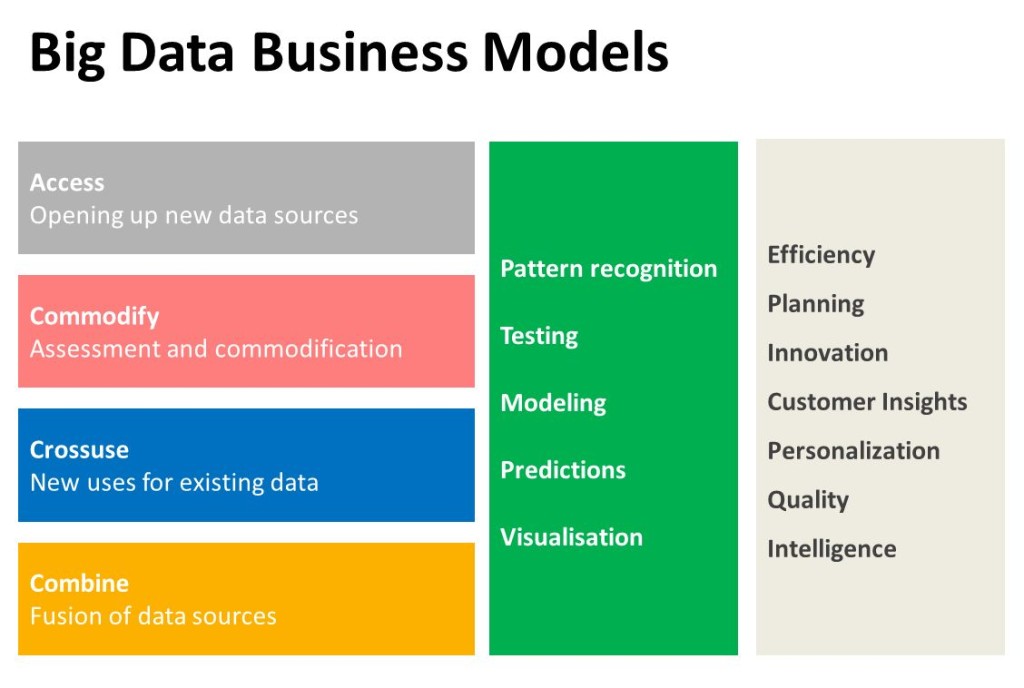

Data begs to be used.

Bruce Schneier, #unowned

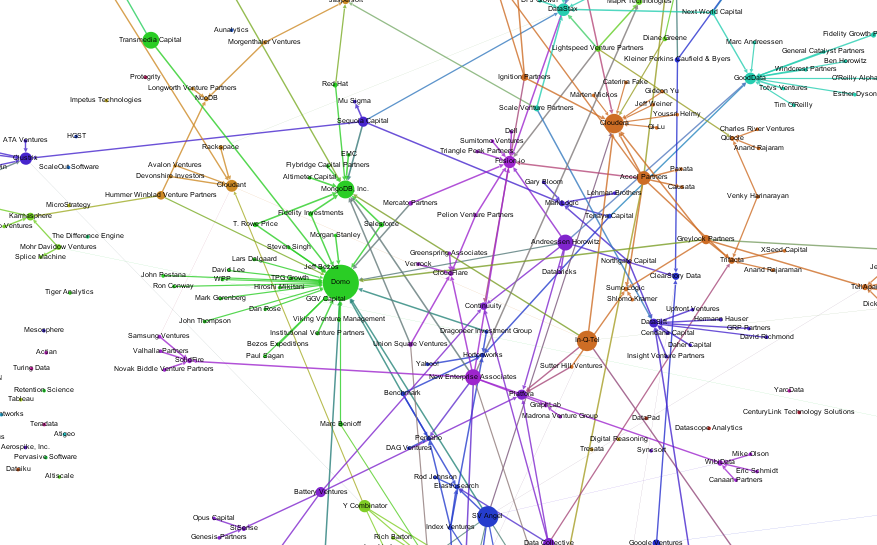

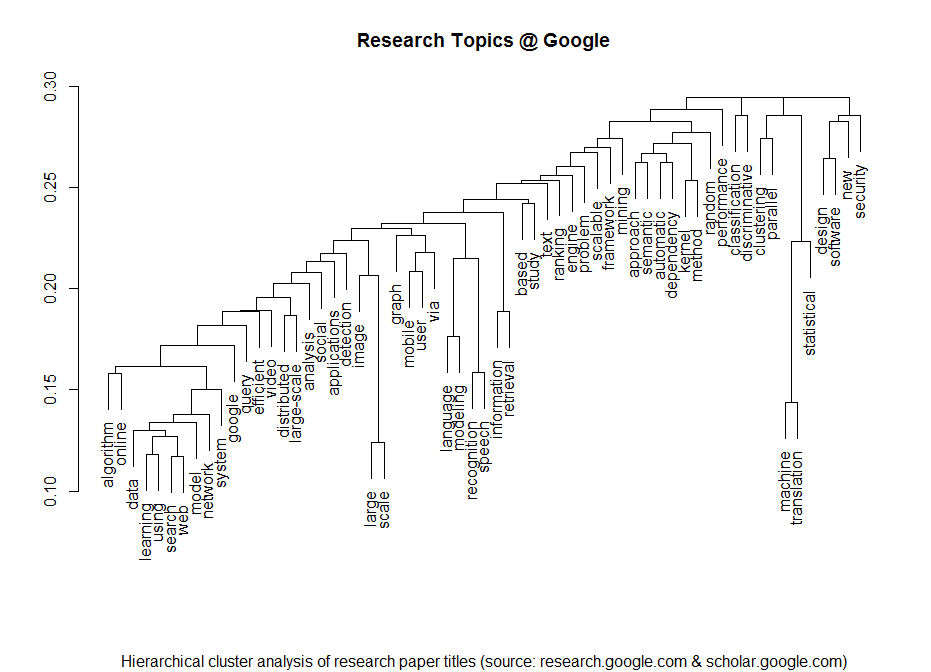

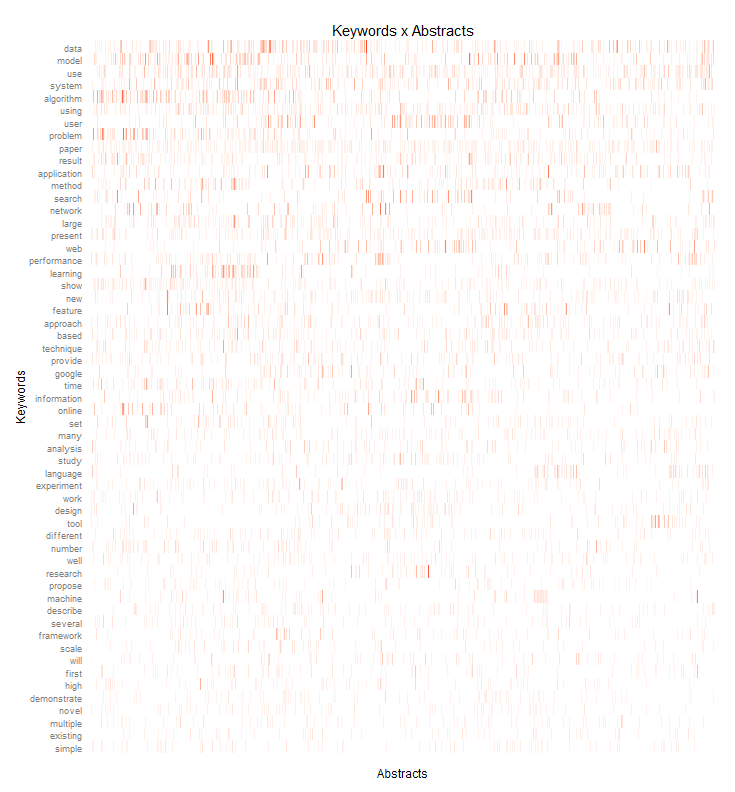

In “Snow Crash”, Neal Stephenson imagines a Central Intelligence Corporation: the CIA and NSA have become a commercial service from which everybody simply purchases the information they need. This was what came immediately to my mind when I read through the transcript of the talk on “Intelligence Gathering and the Unowned Internet” that was held by the Berkman Center for Internet and Society at Harvard, starring Bruce Schneier, who for every mathematician of my generation is simply the godfather of cryptography. Representatives of the intelligence community were also present. Bruce has been arguing for living “beyond fear” for more than a decade, advocating openness instead of digging trenches and winding up barbed wire. I am convinced that information does not want to be free (as many of my comrades in arms tend to phrase it). However, I strongly believe Bruce is right: we can hear data’s call.

The Quantified Self, life-logging, self-tracking: many people are making public even their most intimate data, such as health, mood, or even their field of view at any moment of the day. An increasingly strong urge to do this arises from social responsibility (health care, climate research), but also from quite profane uses, like insurers offering discounts when you let your driving be tracked. So the data is there now, about everything there is to know. Not using it out of fear for privacy would be like having abandoned the steam engine and abstained from industrialization in anticipation of climate change.

Speak with us, don’t speak for us.

statement of autonomy of #OccupyWallStreet

Dinosaurs of modern warfare like the NSA are already turning into dragons, fighting their death match like Smaug over Esgaroth. We have to deal with the dragon and kill it, but this is not what we should make our political goal. It will happen anyway.

We should make provision for the real changes we are going to face. We will find ourselves in a form of society that McLuhan would have called re-tribalized, and that Tönnies would not have called society at all, but community. In a community, the concept of privacy is usually rather weak. But at the same time, there is no surveillance, no panoptic elevated watchmen who themselves cannot be watched. Everyone is everyone else’s keeper. Communal vigilance doesn’t sound like fun. However, it will be the consequence of after-modern communal structures replacing modern society. “In the electric age, we wear all mankind as our skin”, so speaketh McLuhan.

Noi vogliamo cantare l’amor del pericolo. (We want to sing the love of danger.)

M5S

Every aspect of life gets quantified, datafied, made tangible to computers. Our aggregated models of human behavior get replaced by exact descriptions of a single person’s life, and thus by predictions of that person’s future actions. We witness the rise of non-representative democratic forms like Occupy assemblies or liquid democracy. What room can privacy have in a world like this at all? So maybe we should concentrate on shaping our data-laden future, rather than protecting a fantasy of data being contained in some “virtual reality” that could be kept separate from our lives.

The data calls us from the depths; let us listen to its voice!

Further reading:

Transcript of the discussion “The unowned Internet”

Declaration of Liquid Culture

Disrupt Politics!